Browsers and Certification Authorities, the battle continues.

2019 was a busy year, with growing differences of opinion between browser makers and Certification Authorities, an explosion in the number of phishing sites served over HTTPS, and significant progress on the deprecation of TLS 1.0.

Discussions on Extended Validation (and, more generally, on the visual display of certificates in browsers) and on the reduction of certificate lifetimes have taken a prominent place. None of these discussions are over and no consensus seems to be emerging, so 2020 is also looking like a busy year. Time to look ahead…

Will the fate of Extended Validation be determined?

2019 saw the main browsers stop displaying the famous green address bar with the padlock and the name of the company, in favor of a single, uniform display that no longer takes the authentication level of the certificate into account.

However, discussions are still ongoing within the CA/Browser Forum, as well as within the CA Security Council. In 2020, both of these certificate-industry bodies will be looking for an intuitive way to display the identity information of websites.

Historically approved by everyone, including the financial industry and transactional websites, EV (the acronym for Extended Validation) was Google’s target in 2019. The other browsers, under Google’s influence, have followed in this direction: Mozilla is financed by Google, while Microsoft’s browser and Opera are based on the open-source Chromium project. Only Apple continues to display EV.

For browsers, the question is whether or not TLS is the best way to present the authentication information of websites. Their answer seems to be that it is not. Google takes the view that it is not up to Certification Authorities to decide whether a website’s content is legitimate, and wants certificates to be used for encryption purposes only.

Of course, the Certification Authorities see things differently. One can certainly see a purely mercantile reaction in this, since EV certificates are much more expensive. But one can also wonder what becomes of authentication once only encryption remains. The answer seems to lie in the staggering statistics of phishing websites served over HTTPS. For the moment, browsers have indeed imposed an encrypted web… but one that is no longer authenticated!

2020 will therefore be the year of proposals from Certification Authorities: providing better authentication, including the identification of legal entities, following the path of PSD2 in Europe… One thing is certain: identity has never been so important on the Internet, and it is up to all interested parties to find a solution, including browsers, which need to find a way to display the strong authentication of websites. To be continued…

Certificates with a shorter duration: towards one-year certificates

825 days, which is about 27 months or a little over 2 years, is the maximum duration currently allowed for SSL certificates. However, since 2017 and a first attempt within the CA/Browser Forum, the industry has been moving towards reducing this duration to 13 months (12 months plus 1 additional month to cover the renewal period).
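To make the two limits concrete, here is a minimal sketch that classifies a certificate lifetime against them. The 825-day figure comes from the text above; the 398-day figure is an assumption used here as a day count for "13 months", and the function names are purely illustrative.

```python
from datetime import datetime

MAX_DAYS_TODAY = 825      # current maximum quoted in the article
MAX_DAYS_PROPOSED = 398   # assumption: a day count standing in for "13 months"


def validity_days(not_before: datetime, not_after: datetime) -> int:
    """Return the certificate lifetime in whole days."""
    return (not_after - not_before).days


def classify_lifetime(not_before: datetime, not_after: datetime) -> str:
    """Compare a certificate lifetime with the current and proposed limits."""
    days = validity_days(not_before, not_after)
    if days <= MAX_DAYS_PROPOSED:
        return "within proposed limit"
    if days <= MAX_DAYS_TODAY:
        return "within current limit only"
    return "exceeds current limit"


if __name__ == "__main__":
    issued = datetime(2020, 1, 1)
    for expiry in (datetime(2021, 1, 1), datetime(2022, 1, 1), datetime(2023, 1, 1)):
        print(expiry.date(), classify_lifetime(issued, expiry))
```

In practice the two dates would be read from the certificate's "not before" and "not after" fields; they are hard-coded here only to keep the sketch self-contained.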

Google and the other browsers came back in 2019 with another ballot submitted to the CA/Browser Forum, again rejected but by a smaller majority. The market is on the move. Players like Let’s Encrypt offer certificates valid for 3 months, while others want to keep long durations to avoid multiplying interventions on servers. One thing is certain: the market does not yet have the automation systems in place to make the management and installation of certificates easier, so a delay of one or two more years would be preferable, or at least judicious.

But all this ignores Google’s threat to act unilaterally if the regulator does not follow… most likely in 2020.

From TLS 1.0 to TLS 1.3: forced advance

Microsoft, Apple, Mozilla, Google and Cloudflare have announced their intention to deprecate support for TLS 1.0 (a protocol created in 1999 to succeed SSL 3.0, which had itself become highly exposed) and TLS 1.1 (2006), both of which now suffer from too much exposure to security flaws. The deprecation is expected in January 2020.

While TLS 1.2 (2008) is still considered secure today, the market seems to be pushing for TLS 1.3, the most recent version of the standard, finally released in the summer of 2018. TLS 1.3 abandons support for weak algorithms (MD5, RC4, DSA or SHA-224), completes the handshake in fewer steps (and is therefore faster), and reduces vulnerability to downgrade attacks. Simply put, it is the most secure version of the protocol.
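On the client side, refusing the deprecated versions can be sketched with Python's standard `ssl` module. This is only an illustration under stated assumptions: the helper names and the set of version labels below are hypothetical, and `negotiated_version` needs network access to whatever host it is given.

```python
import socket
import ssl

# Version labels treated as deprecated in this sketch (illustrative set).
DEPRECATED_VERSIONS = {"SSLv3", "TLSv1", "TLSv1.1"}


def build_client_context() -> ssl.SSLContext:
    """Create a client context that refuses anything older than TLS 1.2."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def is_deprecated(version_name: str) -> bool:
    """Return True if a negotiated version label is one of the old protocols."""
    return version_name in DEPRECATED_VERSIONS


def negotiated_version(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect to host and return the protocol label the handshake settled on."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version() or "unknown"
```

A server that only speaks TLS 1.0 or 1.1 would fail the handshake with a context built by `build_client_context`, which is roughly what visitors will experience once browsers drop those versions.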

A small problem, however, is that many websites are slow to take action. At the beginning of 2019, only 17% of the Alexa Top 100,000 websites supported TLS 1.3, while just under 23% (22,285) did not even support TLS 1.2 yet. While the decision to deprecate older versions of the protocol is a good one, the way the major web players have gone about it can be criticized, in particular for its unilateral nature. In the meantime, get ready: we are heading there.

The threat of quantum computing

Companies, including Google, are talking more and more about quantum computing. But the reality is that, while quantum computing will impact our industry, it certainly won’t be in 2020, nor for at least a decade. There are still many questions that need to be answered, such as: what is the best algorithm for quantum resistance? No one has that answer, and until there is a consensus in the industry, you are not going to see any quantum-resistant solutions in place.

IoT is growing, but the lack of security remains a problem

IoT is a success, but a number of deployments are being delayed due to a lack of security. In 2020, cloud service providers will offer, or partner with security companies to offer, secure provisioning and management of devices, as well as an overall secure IoT ecosystem, for their customers.

The regulatory frameworks for IoT manufacturing and deployments will most certainly be led by the EU, although we will also see more regulation in the US. Attacks, compromises and IoT hacking will, unfortunately, continue. In addition, security standards will not be met, and we will not come anywhere near a significantly higher percentage of secure devices. Why is that? Original Equipment Manufacturers (OEMs) are still not willing to bear the costs involved, or to pass them on to consumers, for fear of losing sales.

China’s encryption laws will create a lot of uncertainty

In recent years, part of the digital transformation of the world has led to rights and restrictions on data being codified in national laws and by regional organizations. PSD2, GDPR, CCPA, PIPEDA… a real headache for international companies faced with regulatory standards and compliance requirements.

On January 1, 2020, China’s encryption law was due to come into force. It is one more factor to deal with and… one that is still unclear to those doing business in China. Clarification is still needed on several fronts. For example, commercial encryption for international companies must be approved and certified before it can be used in China, but this certification system has not yet been created. Similarly, there is uncertainty about key escrow and about the data that must be made available to the Chinese government. This has led to a wave of speculation, misinformation and, ultimately, overreaction. Given the opacity of parts of the new law, many companies are opting for a wait-and-see approach. This is a wise tactic, assuming your organization does not have an experienced Chinese legal expert.

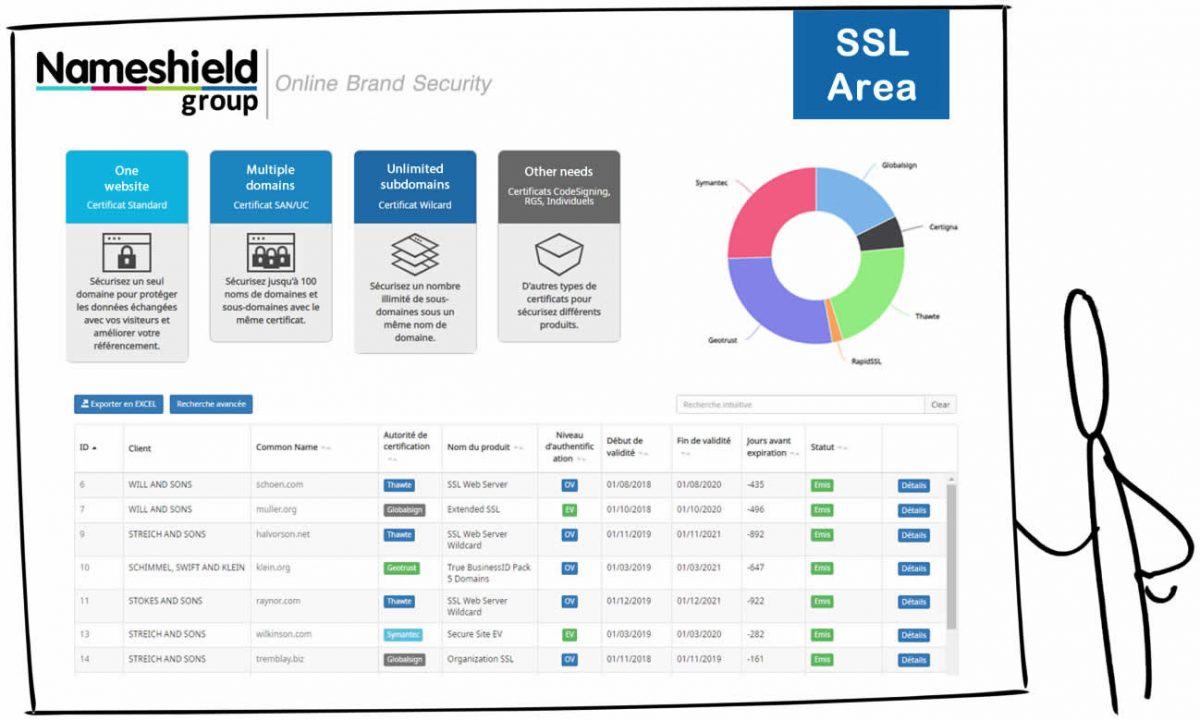

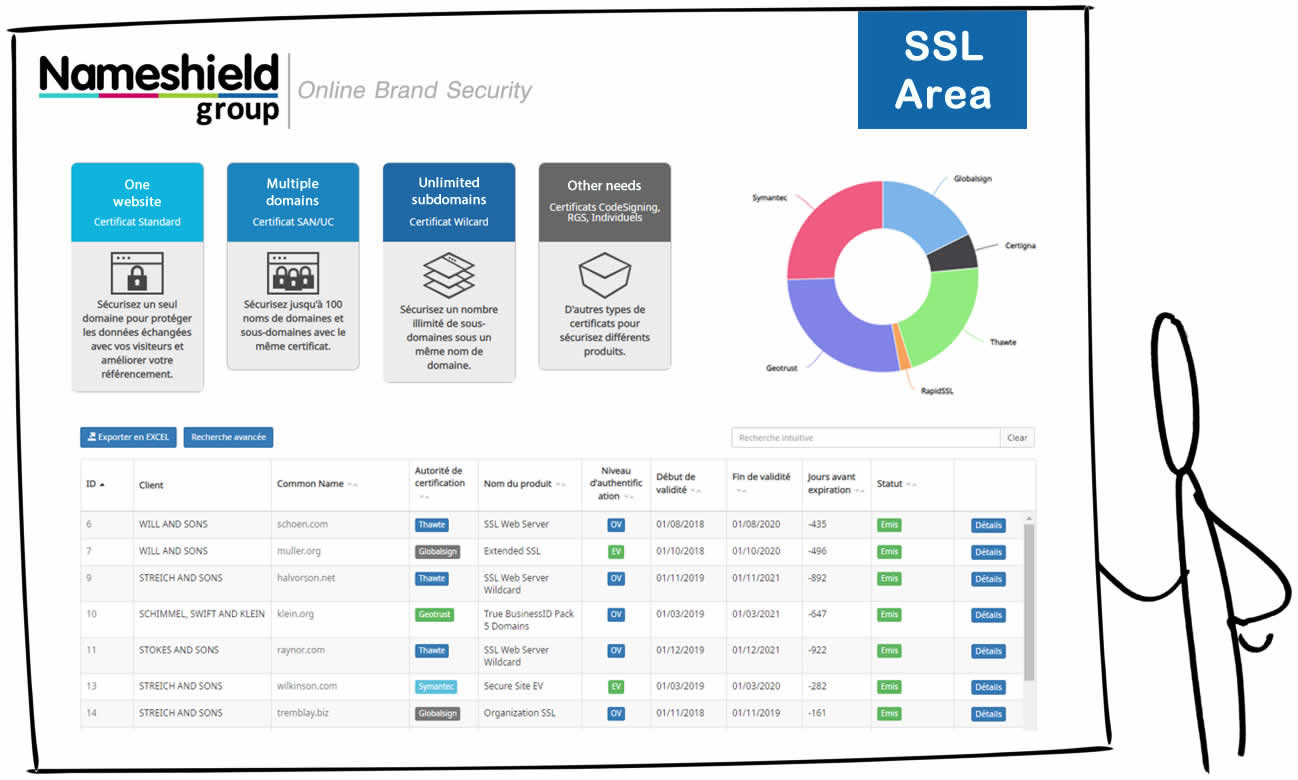

In conclusion, the certificate industry continues to change. Nameshield’s certificate team is at your disposal to discuss all these topics.

Best wishes for 2020.